Distributed load testing on Kubernetes with Locust

This article shows how to run distributed load testing on Kubernetes with Locust.

Introduction

As more and more users rely on online services, even a minor performance glitch can have a significant impact on user experience and business reputation. This is where load testing tools like Locust come into play, allowing us to simulate thousands, or even millions, of users accessing our applications concurrently.

In this article, we will dive deep into the world of distributed load testing on Kubernetes using Locust. We will explore the benefits of this approach, discuss the key components involved, and guide you through the process of setting up your own load testing environment. By the end, you’ll have the knowledge and tools to conduct comprehensive load tests on your Kubernetes-hosted applications, helping you proactively identify and address performance bottlenecks before they impact your users.

What is Locust?

Locust is an open-source load testing tool written in Python. You describe test scenarios in plain Python code to simulate user behavior on your website or API. Locust can also run distributed across many machines, which lets a single test scale up to millions of concurrent users.

Prerequisites

Before we get started, you’ll need to have the following prerequisites in place:

- A running Kubernetes cluster with at least 2 nodes.

- kubectl installed and configured to connect to your cluster.

- Helm

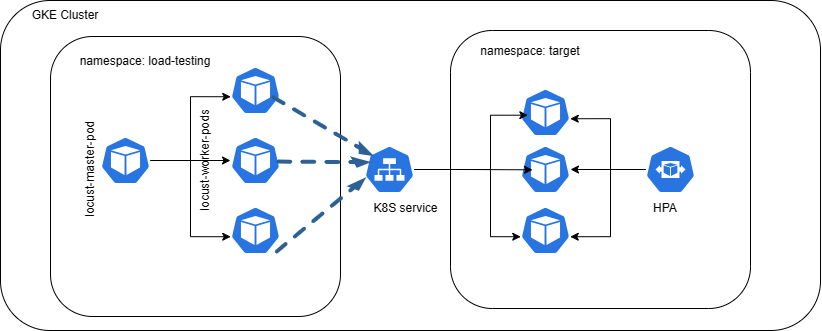

Architecture overview

we will be using the following architecture for our load testing environment:

Installing Locust on Kubernetes

To install Locust on Kubernetes, we will use the deliveryhero Helm chart. Let’s first add the deliveryhero Helm repository:

Create the locustfile:

Install the chart:

Let’s first create a ConfigMap for our locustfile:

Then create a namespace for our load testing environment:

Then install the chart in the locust namespace:

This command creates a Locust master and 3 workers. The workers are autoscaled based on CPU utilization. The target host is the <ip>:<port> of the application we want to load test.

Accessing the Locust UI

To access the Locust UI, we need to port-forward the Locust master service:

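With the port-forward in place, the UI is available at http://localhost:8089. If the Locust master service is exposed with an external IP instead, use http://<locust_ip>:8089, where <locust_ip> is that external IP.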

We should see something like this:

Overview of our Python app

We will use a simple Python app as the target of our load tests. The app is a small REST API that lets us get a list of books, add a book to the cart, and purchase a book. It is written with the FastAPI framework. Here’s the code of our app:

The app is then containerized and its image is pushed to a container registry.

Our app is running on port 1097.

Starting the load testing

Now that the app is deployed to Kubernetes, we can start the load test. We will use the Locust UI to launch it.

Since we are running Kubernetes locally, the Locust UI is at http://localhost:8089. Make sure you have port-forwarded the Locust master service as explained above.

If you are running Kubernetes on a cloud provider, you can access the Locust UI using the external IP of the Locust master service.

In this example, we simulate a load of 100 concurrent users. The spawn rate is 1, which means that 1 user is created every second until the limit of 100 users is reached. The load test runs for 10 minutes.
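To make these settings concrete, here is a small sketch of the ramp-up arithmetic. It assumes an idealized linear ramp and that the 10-minute run time includes the ramp-up phase; real spawning may deviate slightly from this model. The function names are hypothetical and are not part of Locust.

```python
import math


def ramp_up_seconds(target_users: int, spawn_rate: float) -> float:
    """Seconds needed to reach the target user count at a constant spawn rate."""
    if spawn_rate <= 0:
        raise ValueError("spawn_rate must be positive")
    return target_users / spawn_rate


def users_at(elapsed_s: float, target_users: int, spawn_rate: float) -> int:
    """Approximate number of active users after elapsed_s seconds (linear ramp)."""
    if elapsed_s < 0:
        raise ValueError("elapsed_s must be non-negative")
    return min(target_users, math.floor(elapsed_s * spawn_rate))


# Values taken from the example in this article.
TARGET_USERS = 100
SPAWN_RATE = 1.0        # users started per second
RUN_TIME_S = 10 * 60    # total test duration in seconds

ramp = ramp_up_seconds(TARGET_USERS, SPAWN_RATE)
# Assumption: the run time counts from the start of the test, ramp-up included.
steady = RUN_TIME_S - ramp

print(f"ramp-up lasts {ramp:.0f} s, full load lasts {steady:.0f} s")
print(f"users after 30 s: {users_at(30, TARGET_USERS, SPAWN_RATE)}")
```

Under these assumptions, the app only sees the full 100 users for part of the run, which is worth keeping in mind when reading the charts.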

Analyzing the results

After some time, we can see the results of our load test:

The two charts above are the most important ones. The first one shows the number of requests per second. The second one shows response times over the course of the test. We can see that the response time increases as the number of requests per second increases. In other words, our app responds more slowly as the number of concurrent users grows.
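When comparing runs, it often helps to reduce response times to a few summary numbers rather than reading them off a chart. The sketch below uses only the Python standard library to compute the median and 95th percentile of a list of response times. The sample values are invented for illustration and are not results from this test, and `summarize_latencies` is a hypothetical helper.

```python
import statistics


def summarize_latencies(samples_ms):
    """Return median, 95th percentile, and max of response times in milliseconds."""
    if len(samples_ms) < 2:
        raise ValueError("need at least two samples to compute percentiles")
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {
        "median": statistics.median(samples_ms),
        "p95": cuts[94],
        "max": max(samples_ms),
    }


# Hypothetical response times (ms), invented for illustration only.
low_load_ms = [42, 45, 47, 44, 51, 48, 46, 43, 49, 50]
high_load_ms = [95, 120, 110, 340, 105, 98, 410, 130, 115, 102]

for label, samples in (("low load", low_load_ms), ("high load", high_load_ms)):
    stats = summarize_latencies(samples)
    print(
        f"{label}: median={stats['median']:.1f} ms, "
        f"p95={stats['p95']:.1f} ms, max={stats['max']} ms"
    )
```

The 95th percentile is usually more telling than the average, because a handful of slow requests can hide behind a healthy-looking mean.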

Here are some tips to consider in order to make our app more efficient and scalable:

- Right-size your pods: Make sure that your pods have enough resources to handle the load.

- Use Horizontal Pod Autoscaler: HPA scales the number of pod replicas based on CPU utilization. It can also scale on other metrics, such as memory utilization or custom metrics.

- Use Cluster Autoscaler: Cluster Autoscaler adds nodes to your cluster when pods cannot be scheduled. This is useful when you have a lot of pending pods due to insufficient resources.

- Use lightweight containers: Use lightweight base images such as Alpine Linux instead of Ubuntu or CentOS. This reduces the size of your images, which speeds up image pulls and pod startup.

- Use caching: Use caching to reduce the number of requests to your database. This will improve the performance of your app and reduce the load on your database.

- Use readiness and liveness probes: Use readiness and liveness probes to make sure that your pods are healthy and ready to serve traffic. This will reduce the number of failed requests and improve the performance of your app.
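To get a feel for how the Horizontal Pod Autoscaler tip plays out, the sketch below reproduces the scaling rule described in the Kubernetes documentation: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The 10% tolerance matches the documented default. The function name, the replica bounds, and the sample values are assumptions chosen for illustration, and the real controller applies further rules (stabilization windows, pod readiness) that are left out here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified HPA rule: ceil(current_replicas * current_metric / target_metric)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Within the tolerance band, the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (hypothetical values in this sketch).
    return max(min_replicas, min(max_replicas, desired))


# Example: 3 replicas averaging 90% CPU against a 60% target.
print(desired_replicas(current_replicas=3, current_metric=90, target_metric=60))
```

Running a load test while watching the replica count is a practical way to check that the chosen target actually triggers scaling before response times degrade.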

Cleaning up

When you finish the tests, clean up the environment by deleting the Locust master and worker pods. Since we used Helm to install Locust, we will uninstall it using Helm:

This deletes the Locust master and worker pods, along with their services. Helm does not remove the namespace on uninstall, so delete the locust namespace separately to finish the cleanup.

Conclusion

In this blog post, we have seen how to run distributed load testing on Kubernetes using Locust. We have also seen how to analyze the results of our load tests and how to make our app more efficient and scalable. I hope you found this article useful. If you have any questions or comments, please feel free to leave a comment below. Thank you for reading!